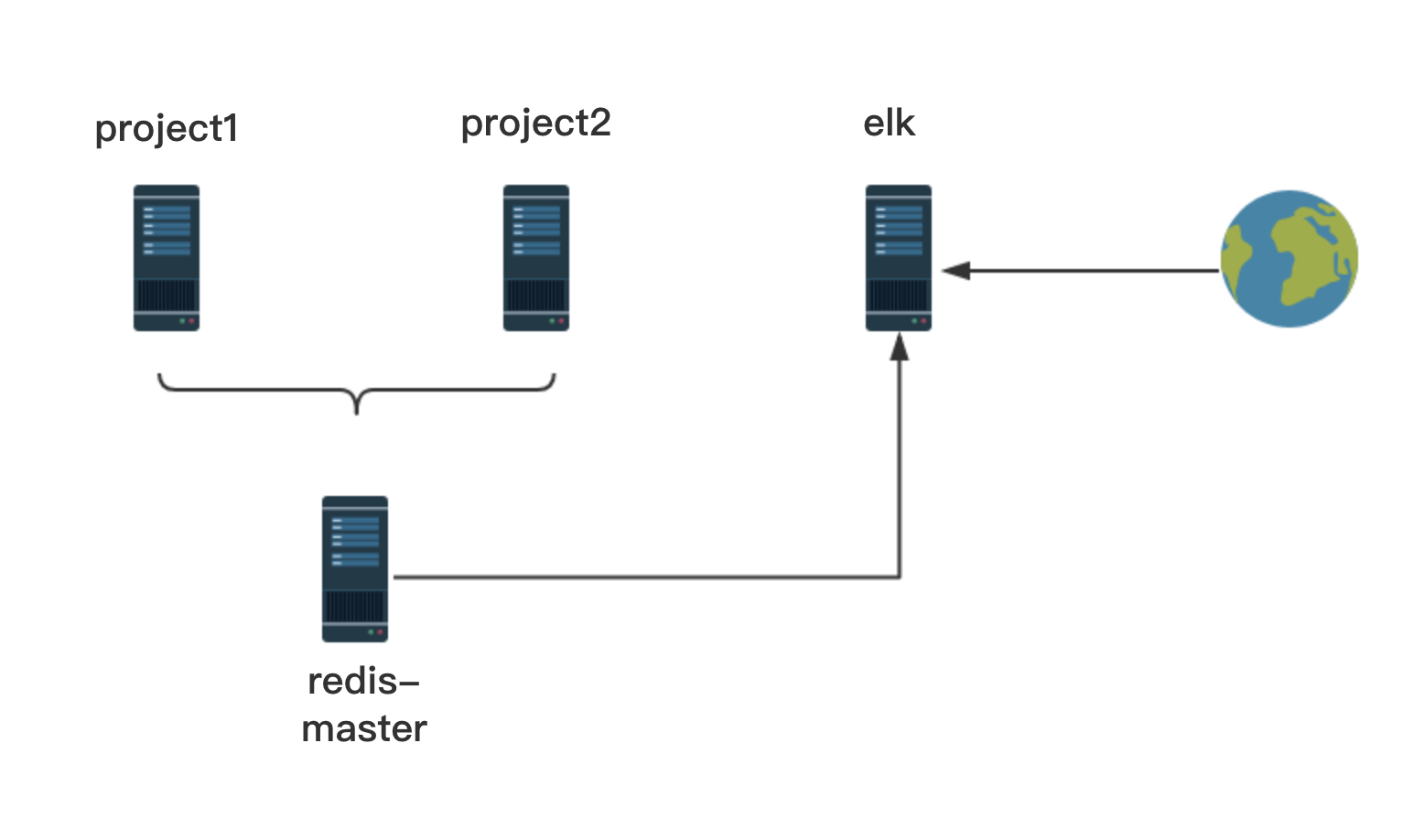

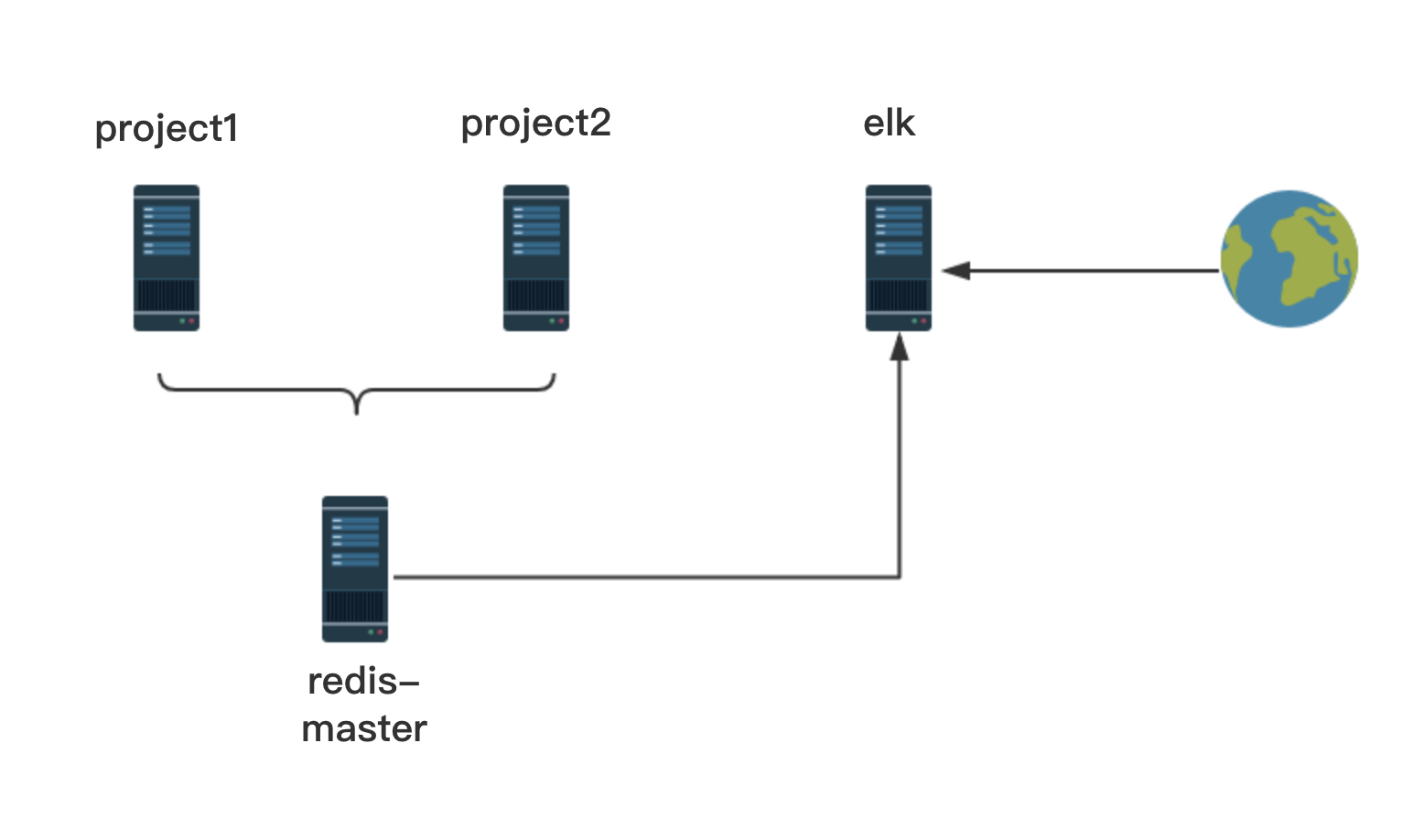

Environment:

The host machine's IP is 192.168.1.198. The containers are:

| Server | IP | Description |
| --- | --- | --- |
| Redis-server | 172.17.0.3 | nginx server 1 |
| Project1 (logstash) | 172.17.0.2 | nginx server 2 |
| Project2 (logstash) | 172.17.0.3 | server 1 |
| Elk | 172.17.0.6 | server 2 |

```bash
docker run -it -d --privileged=true --name redis-master -p 63791:6379 -p 221:22 -v /Users/zhimma/Data/www/:/data/www/ 67793c412ed1 /usr/sbin/init
docker run -it -d --privileged=true --name project1 -p 50441:5044 -p 8081:80 -p 10241:1024 -p 222:22 -v /Users/zhimma/Data/www/:/data/www/ 67793c412ed1 /usr/sbin/init
docker run -it -d --privileged=true --name project2 -p 50442:5044 -p 8082:80 -p 10242:1024 -p 223:22 -v /Users/zhimma/Data/www/:/data/www/ 67793c412ed1 /usr/sbin/init
docker run -it -d --privileged=true --name elk -p 50443:5044 -p 15602:5601 -p 224:22 -p 8083:80 -p 10243:1024 -v /Users/zhimma/Data/www/:/data/www/ 67793c412ed1 /usr/sbin/init
```

```text
docker ps
CONTAINER ID   PORTS   NAMES
3f139b00a661   3306/tcp, 6379/tcp, 0.0.0.0:224->22/tcp, 0.0.0.0:8083->80/tcp, 0.0.0.0:10243->1024/tcp, 0.0.0.0:50443->5044/tcp, 0.0.0.0:15602->5601/tcp   elk
e08ca55e124d   3306/tcp, 6379/tcp, 0.0.0.0:223->22/tcp, 0.0.0.0:8082->80/tcp, 0.0.0.0:10242->1024/tcp, 0.0.0.0:50442->5044/tcp   project2
3b670e7a9ad1   3306/tcp, 6379/tcp, 0.0.0.0:222->22/tcp, 0.0.0.0:8081->80/tcp, 0.0.0.0:10241->1024/tcp, 0.0.0.0:50441->5044/tcp   project1
dbefa01b3393   80/tcp, 3306/tcp, 0.0.0.0:221->22/tcp, 0.0.0.0:63791->6379/tcp   redis-master
```
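The PORTS column can be parsed to show which host port reaches which container port. The following is a minimal Python sketch. It assumes the `HOSTADDR:HOSTPORT->CONTAINERPORT/PROTO` format shown above. Entries without `->` (such as `3306/tcp`) are exposed but not published, so they are skipped.

```python
import re
from typing import Dict

# A published mapping looks like "0.0.0.0:63791->6379/tcp"; the host address before the
# last ":" may be IPv4 or IPv6. Exposed-only entries such as "3306/tcp" contain no "->".
MAPPING = re.compile(r"[^\s,]*:(\d+)->(\d+)/(?:tcp|udp|sctp)")


def parse_ports(ports: str) -> Dict[int, int]:
    """Return {container_port: host_port} for the published ports in a PORTS string."""
    return {int(container): int(host) for host, container in MAPPING.findall(ports)}


if __name__ == "__main__":
    redis_master = "80/tcp, 3306/tcp, 0.0.0.0:221->22/tcp, 0.0.0.0:63791->6379/tcp"
    print(parse_ports(redis_master))  # {22: 221, 6379: 63791}
```

For example, parsing the `elk` row gives `15602` for container port `5601` (Kibana). `docker ps --format '{{.Names}}\t{{.Ports}}'` prints just these two columns if you want to feed them in line by line.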

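Disable the firewall on all servers.

Keep the software packages and configuration files in the host directory that is mounted into every container at `/data/www/`, so that all containers can share them.

redis-master: install Redis, configure it, and enable it to start at boot.

Project servers: install Logstash.

Install a Java environment.

### Download Logstash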

```bash
mkdir /opt/downloads
mkdir /opt/soft
cd /opt/downloads
wget https://artifacts.elastic.co/downloads/logstash/logstash-6.4.1.tar.gz
tar -zxvf logstash-6.4.1.tar.gz -C /opt/soft/
```
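Before extracting a tarball, it is worth checking it against the SHA-512 checksum that Elastic publishes alongside its downloads. This sketch makes two assumptions about that checksum: it is available at the same URL with a `.sha512` suffix (for example `logstash-6.4.1.tar.gz.sha512`), and it contains the hex digest followed by the file name. The following is a minimal Python sketch:

```python
import hashlib
import sys


def sha512_of(path: str, chunk_size: int = 1 << 20) -> str:
    """Hex SHA-512 digest of a file, read in chunks so large tarballs don't fill memory."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def expected_digest(checksum_text: str) -> str:
    """First field of a .sha512 file (assumed format: '<hex digest>  <file name>')."""
    return checksum_text.split()[0].lower()


def main(argv):
    if len(argv) != 2:
        print("usage: verify_sha512.py TARBALL CHECKSUM_FILE")
        return
    tarball, checksum_file = argv
    with open(checksum_file) as f:
        expected = expected_digest(f.read())
    actual = sha512_of(tarball)
    print("OK" if actual == expected else f"MISMATCH: got {actual}")


if __name__ == "__main__":
    main(sys.argv[1:])
```

Usage: download the checksum file next to the tarball, then run `python3 verify_sha512.py logstash-6.4.1.tar.gz logstash-6.4.1.tar.gz.sha512`. The same check applies to the Elasticsearch and Kibana tarballs.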

Configure Logstash

In `/opt/soft/logstash-6.4.1/config/jvm.options`, set the heap size:

```text
-Xms2g
-Xmx2g
```
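Both Logstash and Elasticsearch recommend setting the initial heap (`-Xms`) equal to the maximum heap (`-Xmx`) so the heap is not resized at runtime. The following Python sketch reads a `jvm.options` file and checks this. It assumes the plain `-Xms<n><unit>` form used above. It skips comments and, as a simplification, any line that does not start with `-Xms`/`-Xmx` (for example version-prefixed lines such as `8:-XX:...`).

```python
import re
import sys

UNITS = {"": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}
# Matches e.g. "-Xms2g" or "-Xmx512m"; group 1 tells the two flags apart.
HEAP_FLAG = re.compile(r"^-Xm([sx])(\d+)([kmg]?)$", re.IGNORECASE)


def heap_settings(text: str) -> dict:
    """Return {'Xms': bytes, 'Xmx': bytes} found in jvm.options content (last one wins)."""
    found = {}
    for line in text.splitlines():
        m = HEAP_FLAG.match(line.strip())
        if m:
            kind, amount, unit = m.groups()
            found["Xm" + kind.lower()] = int(amount) * UNITS[unit.lower()]
    return found


def check(text: str) -> str:
    heap = heap_settings(text)
    if "Xms" not in heap or "Xmx" not in heap:
        return "missing -Xms or -Xmx"
    if heap["Xms"] != heap["Xmx"]:
        return "Xms differs from Xmx"
    return f"ok: {heap['Xmx'] // 1024 ** 2} MiB heap"


def main(argv):
    if len(argv) != 1:
        print("usage: check_heap.py /path/to/jvm.options")
        return
    with open(argv[0]) as f:
        print(check(f.read()))


if __name__ == "__main__":
    main(sys.argv[1:])
```

With the two lines above, `check` reports `ok: 2048 MiB heap`.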

Install and configure Supervisor

Reference: https://blog.csdn.net/donggege214/article/details/80264811

Relevant settings in `/etc/supervisord.conf`:

```ini
[unix_http_server]
file=/var/run/supervisor/supervisor.sock
chmod=0700
chown=root:root

[include]
files = supervisord.d/*.conf
```
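Supervisor documents `files` in `[include]` as a space-separated list of file globs. Relative globs are resolved from the directory of the configuration file that contains them. A bare directory name therefore matches only the directory itself, not the program files inside it. The following Python sketch mimics that documented resolution to help check a layout. It is an approximation, not Supervisor's own code.

```python
import configparser
import glob
import os
import sys


def included_files(conf_path: str) -> list:
    """Resolve [include] files= globs: space-separated, relative to the including file."""
    parser = configparser.ConfigParser(
        interpolation=None, inline_comment_prefixes=(";",), strict=False
    )
    parser.read(conf_path)  # silently reads nothing if the file does not exist
    if not parser.has_option("include", "files"):
        return []
    base = os.path.dirname(os.path.abspath(conf_path))
    matches = []
    for pattern in parser.get("include", "files").split():
        matches.extend(sorted(glob.glob(os.path.join(base, pattern))))
    return matches


def main(argv):
    conf = argv[0] if argv else "/etc/supervisord.conf"
    for path in included_files(conf):
        kind = "directory, not a config file" if os.path.isdir(path) else "file"
        print(f"{path}: {kind}")


if __name__ == "__main__":
    main(sys.argv[1:])
```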

vi /etc/supervisord.d/l.conf

```ini
[program:elk-l]
; -r reloads the pipeline automatically when the .conf files change
command=/opt/soft/logstash-6.4.1/bin/logstash -r -f /data/www/elk/conf/project/*.conf
autostart=true
autorestart=true
user=root
redirect_stderr=true
stdout_logfile=/var/log/elk/l.log
priority=10
```

vi /data/www/elk/conf/project/project.conf

```conf
input {
  file {
    path => [ "/data/www/project-mdl/trunk/Common/Runtime/Apps/*.log" ]
    start_position => "beginning"
    ignore_older => 0
    sincedb_path => "/dev/null"
    type => "Api"
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}

filter {
}

output {
  if [type] == "Api" {
    redis {
      host => '192.168.1.198'
      port => '63791'
      db => '1'
      data_type => "list"
      key => "project"
    }
  }
  stdout { codec => rubydebug }
}
```
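The `multiline` codec settings in this file group lines into events:

- A line that does not match `^\[` (`negate => true`) is appended to the previous event (`what => "previous"`).
- As a result, an entry that starts with a bracketed timestamp keeps its continuation lines, such as a stack trace.

The following Python sketch only illustrates that grouping rule on a list of lines. It is not the codec's implementation and ignores codec options such as `auto_flush_interval`. The sample log lines are invented.

```python
import re
from typing import List


def group_multiline(lines: List[str], pattern: str = r"^\[") -> List[str]:
    """Mimic multiline { pattern => "^\\[" negate => true what => "previous" }:
    lines that do NOT match the pattern are joined onto the preceding event."""
    regex = re.compile(pattern)
    events: List[str] = []
    for line in lines:
        if regex.search(line) or not events:
            events.append(line)          # a matching line starts a new event
        else:
            events[-1] += "\n" + line    # a non-matching line continues the previous one
    return events


if __name__ == "__main__":
    sample = [  # invented sample lines
        "[2018-09-20 10:00:00] ERROR: query failed",
        "#0 /data/www/app/Db.php(42): execute()",
        "#1 {main}",
        "[2018-09-20 10:00:01] INFO: request done",
    ]
    for event in group_multiline(sample):
        print(repr(event))
```

The first three sample lines become one event and the last line becomes a second event.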

Restart Supervisor. If data is written to the redis-master server, the project logs are being collected successfully.
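One way to confirm this is to check the length of the `project` list in Redis database 1. If `redis-cli` is available, `redis-cli -h 192.168.1.198 -p 63791 -n 1 llen project` does the job.

The following dependency-free Python sketch does the same by speaking the Redis protocol (RESP) over a socket. It assumes two things: the host-published port `63791` from the `docker run` commands, and a Redis instance with no password.

Once Logstash on the ELK server is running, it removes events from this list. A length of 0 at that point does not mean nothing arrived.

```python
import socket
import sys


def encode_command(*args: str) -> bytes:
    """Encode a command as a RESP array of bulk strings."""
    out = [f"*{len(args)}\r\n".encode()]
    for arg in args:
        data = arg.encode()
        out.append(b"$%d\r\n%s\r\n" % (len(data), data))
    return b"".join(out)


def read_line(replies) -> bytes:
    """Read one CRLF-terminated reply line (enough for '+OK', '-ERR ...' and ':<int>')."""
    line = replies.readline()
    if not line:
        raise ConnectionError("connection closed by Redis")
    return line.rstrip(b"\r\n")


def list_length(host: str, port: int, db: int, key: str) -> int:
    """SELECT the database, then return LLEN of the key."""
    with socket.create_connection((host, port), timeout=5) as sock:
        with sock.makefile("rb") as replies:
            sock.sendall(encode_command("SELECT", str(db)))
            reply = read_line(replies)
            if not reply.startswith(b"+OK"):
                raise RuntimeError(f"SELECT failed: {reply!r}")
            sock.sendall(encode_command("LLEN", key))
            reply = read_line(replies)
            if not reply.startswith(b":"):
                raise RuntimeError(f"LLEN failed: {reply!r}")
            return int(reply[1:])


def main(argv):
    if len(argv) != 2:
        print("usage: redis_llen.py HOST PORT   (e.g. 192.168.1.198 63791)")
        return
    n = list_length(argv[0], int(argv[1]), db=1, key="project")
    print(f"'project' list length in db 1: {n}")


if __name__ == "__main__":
    main(sys.argv[1:])
```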

ELK server

Install a Java environment.

Install ELK:

```bash
mkdir /opt/downloads
mkdir /opt/soft
cd /opt/downloads
wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.4.1.tar.gz
wget https://artifacts.elastic.co/downloads/kibana/kibana-6.4.1-linux-x86_64.tar.gz
wget https://artifacts.elastic.co/downloads/logstash/logstash-6.4.1.tar.gz
tar -zxvf logstash-6.4.1.tar.gz -C /opt/soft/
tar -zxvf elasticsearch-6.4.1.tar.gz -C /opt/soft/
tar -zxvf kibana-6.4.1-linux-x86_64.tar.gz -C /opt/soft/
```

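Configure ELK

Create the elastic user

Elasticsearch refuses to run as root (and running it as root is not recommended anyway), so create a dedicated user to start it.

```bash
adduser elastic   # create the elastic user
passwd elastic    # set the elastic user's password
```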

Create the Elasticsearch data and log directories

```bash
cd /data/www/elk
mkdir data   # data directory
mkdir log    # log directory
mkdir bak    # backup directory
chown -R elastic /data/www/elk/   # make elastic the owner of these directories
```

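Raise the file-descriptor and process limits

Elasticsearch requires at least 65536 file descriptors. It also requires a per-user limit of at least 2048 processes/threads (4096 in Elasticsearch 6.x, which the `nproc` value below satisfies). Adjust the limits as follows. They correspond to these two startup errors:

- max file descriptors [4096] for elasticsearch process is too low, increase to at least [65536]
- max number of threads [1024] for user [es] is too low, increase to at least [2048]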

Add the following to `/etc/security/limits.conf` (for example with `vim /etc/security/limits.conf`):

```text
* soft nofile 655350
* hard nofile 655350
* soft nproc 4096
* hard nproc 8192
elastic soft memlock unlimited
elastic hard memlock unlimited
```
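To confirm that the new limits are in effect, the following Python sketch uses the standard `resource` module (Unix only) to print the soft limits of its own process. The thresholds are 65536 open files and, as an assumption for Elasticsearch 6.x, 4096 processes/threads.

Run it as the account that will run Elasticsearch, for example in a fresh `su - elastic` login, because `limits.conf` is applied by `pam_limits` at login. Processes started by Supervisor do not go through a PAM login, so they inherit supervisord's own limits (see its `minfds`/`minprocs` settings) instead.

```python
import resource

# Minimums from Elasticsearch's bootstrap checks (threads: 4096 assumed for 6.x).
REQUIRED = {
    "RLIMIT_NOFILE": 65536,  # open file descriptors
    "RLIMIT_NPROC": 4096,    # processes/threads for this user
}


def too_low(required=REQUIRED) -> dict:
    """Return {limit_name: current_soft_limit} for every soft limit below its minimum."""
    problems = {}
    for name, minimum in required.items():
        soft, _hard = resource.getrlimit(getattr(resource, name))
        if soft != resource.RLIM_INFINITY and soft < minimum:
            problems[name] = soft
    return problems


if __name__ == "__main__":
    problems = too_low()
    if problems:
        for name, soft in problems.items():
            print(f"{name} soft limit {soft} is below {REQUIRED[name]}")
    else:
        print("file and process limits look sufficient")
```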

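Adjust kernel memory settings

Set vm.swappiness to 1 to avoid unnecessary swapping between memory and disk, which hurts performance.

Also raise the maximum number of memory map areas, vm.max_map_count. Otherwise Elasticsearch fails at startup with: max virtual memory areas vm.max_map_count [65530] likely too low, increase to at least [262144]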

Add the following to `/etc/sysctl.conf` (`vim /etc/sysctl.conf`), then run `sysctl -p` to apply it immediately:

```text
vm.swappiness = 1
vm.max_map_count = 655360
```
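After `sysctl -p`, the running values can be read back from `/proc/sys`. The following Python sketch (Linux only) compares them with the targets above. The `vm.*` settings are not namespaced per container, so inside Docker this reads the Docker host's kernel values. Changing them from a privileged container also changes the host.

```python
from pathlib import Path

# Targets from /etc/sysctl.conf above; Elasticsearch itself only checks
# vm.max_map_count >= 262144.
CHECKS = {
    "vm.max_map_count": lambda v: v >= 262144,
    "vm.swappiness": lambda v: v <= 1,
}


def read_sysctl(name: str, root: str = "/proc/sys") -> int:
    """Read an integer sysctl, e.g. vm.max_map_count from /proc/sys/vm/max_map_count."""
    return int(Path(root, *name.split(".")).read_text().strip())


def report(root: str = "/proc/sys") -> dict:
    """Return {name: (value, ok)} for each checked sysctl."""
    results = {}
    for name, ok in CHECKS.items():
        value = read_sysctl(name, root)
        results[name] = (value, ok(value))
    return results


if __name__ == "__main__":
    try:
        for name, (value, ok) in report().items():
            print(f"{name} = {value} {'ok' if ok else 'NEEDS CHANGE'}")
    except OSError as exc:  # e.g. not running on Linux
        print(f"cannot read sysctl values: {exc}")
```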

Disable swap, then restart.

Configure the Elasticsearch heap size

In `/opt/soft/elasticsearch-6.4.1/config/jvm.options` (`cd /opt/soft/elasticsearch-6.4.1/config/`, then `vim jvm.options`), set:

```text
-Xms2g
-Xmx2g
```

Configure `elasticsearch.yml`. Its active (non-comment) settings:

```text
[root@3f139b00a661 ~]# grep -n '^[a-z]' /opt/soft/elasticsearch-6.4.1/config/elasticsearch.yml
17:cluster.name: elk-demo
33:path.data: /data/www/elk/data
37:path.logs: /data/www/elk/log
43:bootstrap.memory_lock: false
55:network.host: 0.0.0.0
59:http.port: 9200
```
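Once Elasticsearch is running, `GET /_cluster/health` on the HTTP port configured above (9200) reports the cluster status. The following Python sketch uses only the standard library. Run it inside the `elk` container, because the `docker run` command does not publish port 9200 to the host.

On a single node like this one, the status is typically `yellow` once indices exist. Replica shards cannot be allocated on the same node as their primaries.

```python
import json
import sys
import urllib.request


def health_url(host: str = "127.0.0.1", port: int = 9200) -> str:
    return f"http://{host}:{port}/_cluster/health"


def summarize(health: dict) -> str:
    """Turn the _cluster/health JSON into a one-line summary."""
    return (f"{health['cluster_name']}: {health['status']}, "
            f"{health['number_of_nodes']} node(s), "
            f"{health['unassigned_shards']} unassigned shard(s)")


def main(argv):
    host = argv[0] if argv else "127.0.0.1"
    try:
        with urllib.request.urlopen(health_url(host), timeout=5) as resp:
            print(summarize(json.load(resp)))
    except OSError as exc:  # URLError and timeouts are OSError subclasses
        print(f"Elasticsearch not reachable: {exc}")


if __name__ == "__main__":
    main(sys.argv[1:])
```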

Install and configure Supervisor

```text
grep -n '^[a-z]' /etc/supervisord.conf
4:file=/var/run/supervisor/supervisor.sock ; (the path to the socket file)
5:chmod=0700 ; sockef file mode (default 0700)
6:chown=root:root ; socket file uid:gid owner
16:logfile=/var/log/supervisor/supervisord.log ; (main log file;default $CWD/supervisord.log)
17:logfile_maxbytes=50MB ; (max main logfile bytes b4 rotation;default 50MB)
18:logfile_backups=10 ; (num of main logfile rotation backups;default 10)
19:loglevel=info ; (log level;default info; others: debug,warn,trace)
20:pidfile=/var/run/supervisord.pid ; (supervisord pidfile;default supervisord.pid)
21:nodaemon=false ; (start in foreground if true;default false)
22:minfds=1024 ; (min. avail startup file descriptors;default 1024)
23:minprocs=200 ; (min. avail process descriptors;default 200)
37:supervisor.rpcinterface_factory = supervisor.rpcinterface:make_main_rpcinterface
40:serverurl=unix:///var/run/supervisor/supervisor.sock ; use a unix:// URL for a unix socket
129:files = /etc/supervisord.d/elk.conf
```

grep '^[a-z]' /etc/supervisord.d/elk.conf

This `grep` keeps only lines that start with a lowercase letter. It therefore also drops the `[program:…]` section headers. The output below shows the settings of three programs (Elasticsearch, Logstash and Kibana); in the file itself, each group sits under its own `[program:…]` header.

```ini
command=/opt/soft/elasticsearch-6.4.1/bin/elasticsearch
autostart=true
autorestart=true
user=elastic
redirect_stderr=true
stdout_logfile=/var/log/elk/e.log
priority=1

command=/opt/soft/logstash-6.4.1/bin/logstash -r -f /data/www/elk/conf/elk/*.conf
autostart=true
autorestart=true
user=elastic
redirect_stderr=true
stdout_logfile=/var/log/elk/l.log
priority=10

command=/opt/soft/kibana-6.4.1-linux-x86_64/bin/kibana
autostart=true
autorestart=true
user=elastic
redirect_stderr=true
stdout_logfile=/var/log/elk/k.log
priority=20
```

cat /data/www/elk/conf/elk/elk.conf

```conf
input {
  redis {
    host => '192.168.1.198'
    port => 63791
    db => 1
    data_type => "list"
    key => "project"
  }
  stdin {
    codec => multiline {
      pattern => "^\["
      negate => true
      what => "previous"
    }
  }
}

filter {
}

output {
  elasticsearch {
    hosts => [ "127.0.0.1:9200" ]
    index => "project"
  }
  stdout { codec => rubydebug }
}
```
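To confirm that events reach Elasticsearch end to end, query the `_count` API of the `project` index used in the output above. The count should grow as the project logs are written. The following is another standard-library Python sketch, again meant to run inside the `elk` container:

```python
import json
import sys
import urllib.request


def count_url(index: str, host: str = "127.0.0.1", port: int = 9200) -> str:
    return f"http://{host}:{port}/{index}/_count"


def parse_count(body: bytes) -> int:
    """Extract the document count from a _count response body."""
    return int(json.loads(body)["count"])


def main(argv):
    index = argv[0] if argv else "project"
    try:
        with urllib.request.urlopen(count_url(index), timeout=5) as resp:
            print(f"{index}: {parse_count(resp.read())} documents")
    except OSError as exc:  # connection refused, HTTP 404 for a missing index, etc.
        print(f"count failed: {exc}")


if __name__ == "__main__":
    main(sys.argv[1:])
```

Running it twice a few seconds apart, while the project is writing logs, should show the count increasing.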